- cross-posted to:

- [email protected]

I have a very dumb cousin and it’s basically all they’ve been sharing lately.

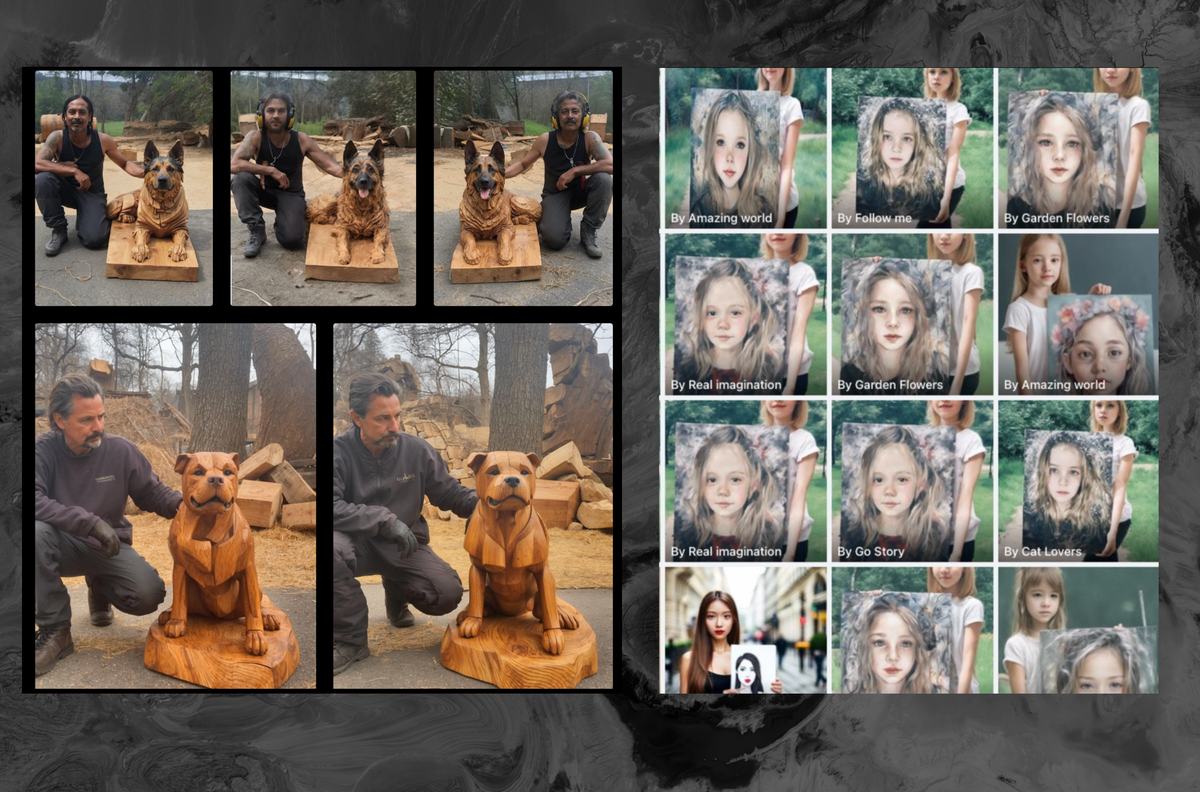

All of these images are AI-generated, and stolen from an artist named Michael Jones.

It absolutely is not “stolen” from Michael Jones.

He made, in real life, a wooden statue of a dog.

That certainly gives him no exclusive right to make images with a wooden statue of a dog. And he is definitely not the first person to do a carving of a dog in wood; dogs and humans have been around for a long time, and statues of dogs predate writing.

The problem that someone like Jones has isn’t that people are making images, but that Jones doesn’t have a great way to reliably prove that he created an actual statue; he’s just taking a picture of the thing. Once upon a time, that was a pretty good proof, because it was difficult to create such an image without having created a statue of a dog. Now, it’s not; a camera is no longer nearly as useful as a tool to prove that something exists in the real world.

So he’s got a technical problem, and there are ways to address that.

- He could take a video. Right now it isn't easy to fake a convincing walkaround video, though I assume we'll get there.

- He could get a trusted organization to certify that he made the statue, and reference them. If I'm linking to woodcarvers-international.org, then that's not something that someone can replicate to claim that they created the thing in real life.

- It might be possible to build cameras that produce cryptographically signed output, though it will be technically difficult to make them in a way that can't be compromised.

But in no case are we going to wind up in a world where people cannot make images of a wooden dog statue – or anything else – because it might make life more difficult for someone who has created a wooden dog to prove that they created that statue in real life.

Even before the advent of AI-generated images, if someone had taken his photo of his art and painstakingly used Photoshop to create a believable second image with a different person standing next to it, representing it as their own without giving him any credit, we would have called that process “stealing”.

What strikes me as being utterly pathetic is the people posting this AI-generated shit in order to have people praise “their” work. How empty their lives must be if the only ego-boost they can get is Facebook likes for something they’re lying about having made themselves.

My best guess is that they’re being posted by clickbait farms to sell ads to people who view their pages… though I don’t know enough about Facebook to know whether that’s actually possible.

I don’t want to believe that hundreds of actual people are independently stealing and making variants on this one artist’s work to get fake internet points…

The really sad thing is that I can believe there are hundreds of people doing this. I have encountered people who straight up stole other artists’ work and posted it, claiming they made it, in order to get fake internet points. I used to be a moderator on a site that had very strict rules about art theft; we issued bans for it at least once a week. I can totally believe there are people on that site now using AI images to avoid detection.

I wonder how many of the comments are also “AI” whose job is to like and reply with some variation of “WOW! 😍”

It’s definitely for troll-farming reasons. Most likely they’re using it to create legit-seeming accounts that they can then sell to a troll farm, which will use them to influence a product or an election or something. Using AI to slightly vary content that they already know goes viral easily makes finding new content to share much cheaper.

I’m really surprised.

This is honestly the last thing I expected.